Introduction to Kubernetes

August 4, 2022 | 9 min read | Kubernetes

In this article, we’ll cover the essentials of Kubernetes: the basics you need to understand before diving in and integrating it with other solutions.

What is Kubernetes?

Kubernetes is an open-source platform for managing containerized workloads and services. It has a large, fast-growing ecosystem, and Kubernetes services, support, and tools are widely available. Its main features are:

- Portable

- Extensible

- Open Source

- Manage workloads

- Container Services

- Declarative configuration

- Automation

Information

| Author: | Joe Beda, Brendan Burns, Craig McLuckie |
|---|---|
| Developer: | |
| Launch: | 2014 |

Kubernetes Components

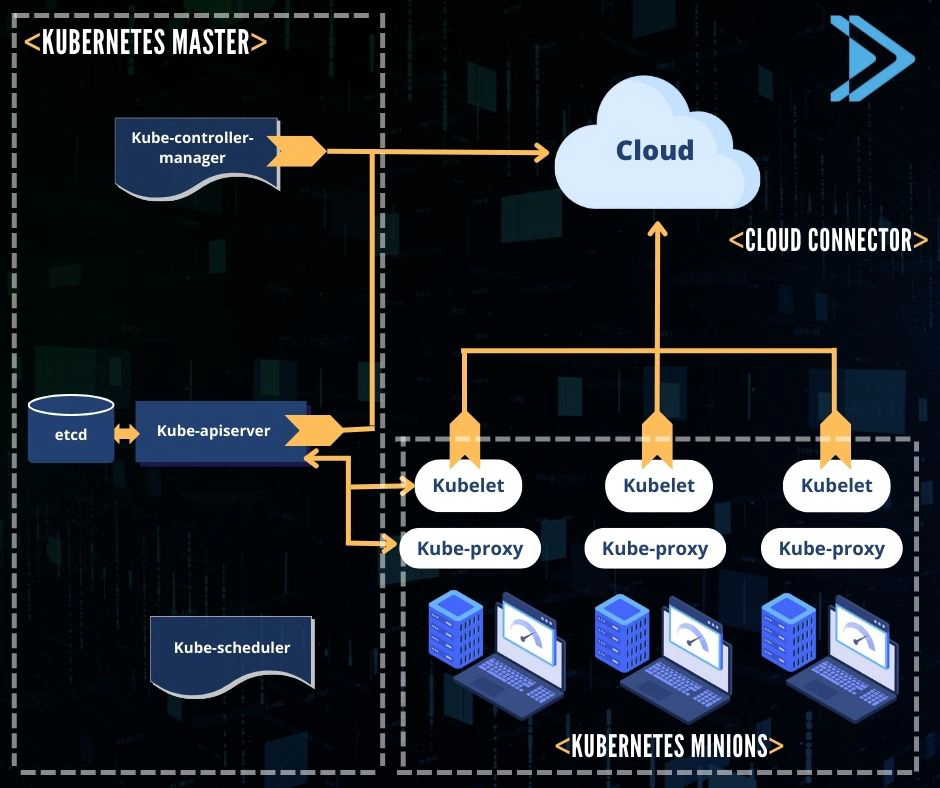

Architecture:

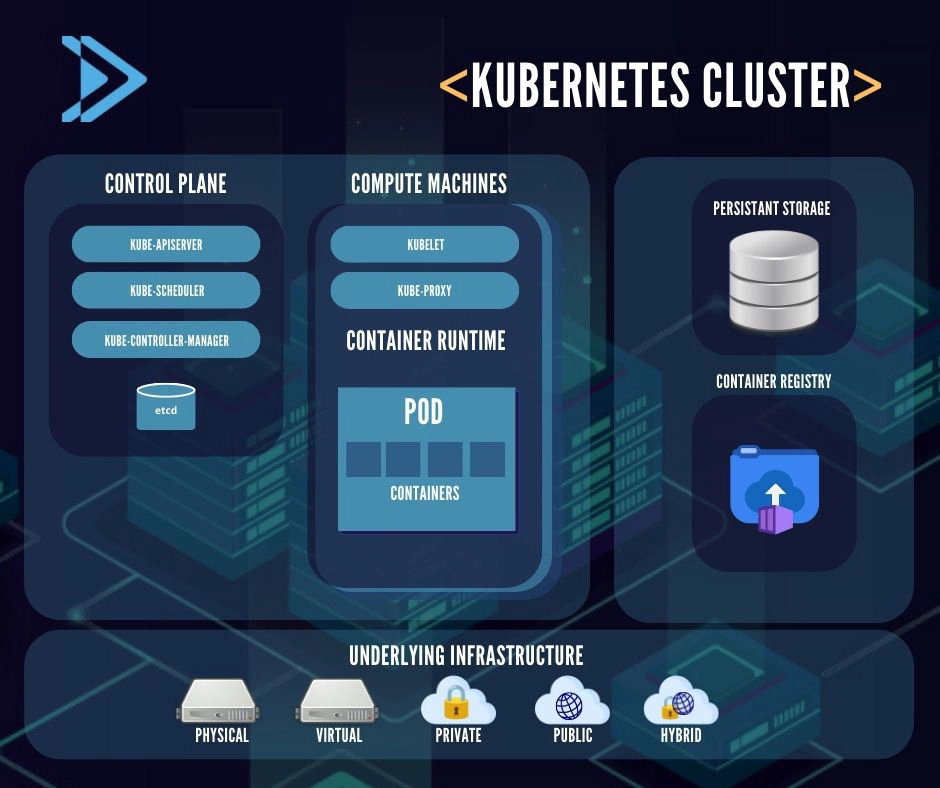

- A working Kubernetes deployment is called a cluster.

- You can view a Kubernetes cluster as two parts:

  - The control plane.

  - The compute machines, or nodes.

- Each node is its own Linux environment:

  - It can be a physical or virtual machine.

  - Each node runs pods, which are made up of containers.

Components:


- Nodes: A Kubernetes cluster needs at least one compute node, but will typically have many.

- Underlying infrastructure: The environment (servers) where you run Kubernetes.

Control plane: The Kubernetes components that control the cluster.

- kube-apiserver: The front end of the Kubernetes control plane. It validates incoming requests and processes the valid ones. You can access the API through:

  - REST calls.

  - The kubectl command line interface.

  - Other command line tools, such as kubeadm.

- kube-scheduler: Considers the resource needs of a pod (such as CPU or memory) along with the health of the cluster, then schedules the pod onto an appropriate compute node.

- kube-controller-manager: Runs the controllers that are responsible for running and controlling the cluster.

- etcd: A key-value store that holds the configuration data and information about the state of the cluster.

Compute machines:

- kubelet: Each compute node contains a kubelet, a small application that communicates with the control plane.

- kube-proxy: Each compute node also contains kube-proxy, which facilitates Kubernetes networking services (inside or outside the cluster).

- Container runtime engine: Runs the containers.

- Pods: A pod is the smallest and simplest unit of the Kubernetes object model.

Other components:

- Persistent storage: Kubernetes can also manage application data attached to a cluster.

- Container registry: The container images that Kubernetes relies on are stored in a container registry.
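To make the pods, persistent storage, and container registry pieces above more concrete, here is a minimal sketch of two manifests: a PersistentVolumeClaim that requests storage, and a single-container Pod that pulls its image from a registry and mounts that storage. The names (demo-data, demo-pod), the nginx image, and the 1Gi size are illustrative assumptions, and the claim assumes the cluster has a default StorageClass able to provision the volume.

```yaml
# Hypothetical claim: asks the cluster for 1 GiB of persistent storage.
# Assumes a default StorageClass exists to provision the volume.
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: demo-data
spec:
  accessModes:
    - ReadWriteOnce            # mountable read-write by a single node
  resources:
    requests:
      storage: 1Gi
---
# Hypothetical single-container Pod, the smallest deployable unit.
apiVersion: v1
kind: Pod
metadata:
  name: demo-pod
  labels:
    app: demo
spec:
  containers:
    - name: web
      # registry/repository:tag; the container runtime pulls this image
      # from the Docker Hub registry if it is not already on the node
      image: docker.io/library/nginx:1.23
      ports:
        - containerPort: 80
      volumeMounts:
        - name: data
          mountPath: /data     # where the claimed storage appears in the container
  volumes:
    - name: data
      persistentVolumeClaim:
        claimName: demo-data   # binds the Pod to the claim defined above
```

Both documents can live in one file and be created with kubectl apply -f, in the same way as the manifests later in this article.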

Deploy a Kubernetes cluster on AWS using EKS

What is EKS?

Amazon Elastic Kubernetes Service (Amazon EKS) is a managed container service for running and scaling Kubernetes applications in the cloud or on-premises.

Deploy an EKS cluster

To create an EKS cluster with eksctl (which provisions the cluster through AWS CloudFormation), we perform the following steps:

- We need an AWS account in order to use its services.

- We must have the following prerequisites installed to use and manage AWS from the CLI:

  - aws-cli

  - eksctl

  - kubectl

  - aws-iam-authenticator

- We must configure aws-cli with the aws configure command.

- Once the CLI is configured, we export the name of the AWS CLI profile we want to use, so that the CLI and eksctl act on our account:

$ export AWS_PROFILE=name

- Next, we create the YAML files that describe the cluster (eksctl turns it into CloudFormation stacks) and the application we will deploy on it:

$ touch cluster.yaml

$ touch deploy.yaml

- We open the file in an editor:

$ vim cluster.yaml

We copy the following code into the cluster.yaml file:

```yaml
apiVersion: eksctl.io/v1alpha5
kind: ClusterConfig

metadata:
  name: allan-cluster
  region: us-west-2

nodeGroups:
  - name: node-1
    instanceType: t2.micro
    desiredCapacity: 2
    volumeSize: 80
    ssh:
      publicKeyPath: ~/.ssh/id_rsa.pub
  - name: node-2
    instanceType: t2.micro
    desiredCapacity: 2
    volumeSize: 100
    ssh:
      publicKeyPath: ~/.ssh/id_rsa.pub
```

- We create the cluster with eksctl:

$ eksctl create cluster -f cluster.yaml

This process takes between 15 and 25 minutes.

- We open the AWS console and check the EKS and CloudFormation sections to verify that our cluster is deployed.

- Next, we deploy an application and expose it through a NodePort Service. We open the deploy.yaml file created earlier:

$ vim deploy.yaml

We copy the following manifest into it. It defines a Deployment and a NodePort Service:

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: hello-world-deployment
  labels:
    app: hello-world
spec:
  selector:
    matchLabels:
      app: hello-world
  replicas: 1
  template:
    metadata:
      labels:
        app: hello-world
    spec:
      containers:
        - name: hello-world
          image: pvermeyden/nodejs-hello-world:a1e8cf1edcc04e6d905078aed9861807f6da0da4
          ports:
            - containerPort: 80
---
apiVersion: v1
kind: Service
metadata:
  name: hello-world
spec:
  selector:
    app: hello-world
  ports:
    - protocol: TCP
      port: 80
      targetPort: 80
      nodePort: 30000
  type: NodePort
```

We apply the manifest using kubectl:

$ kubectl apply -f deploy.yaml

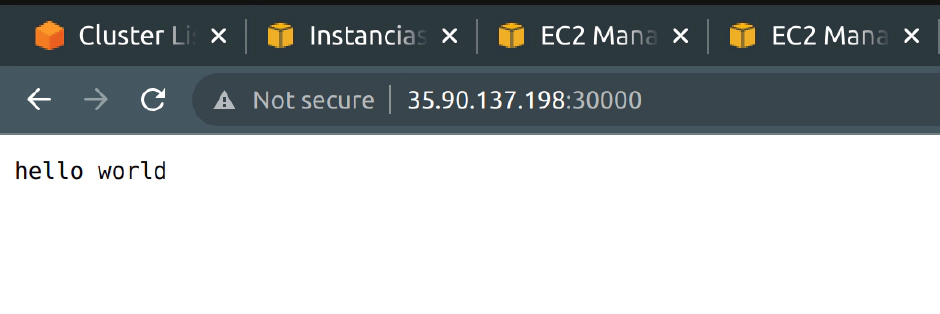

This takes about 2 minutes.

- We go back to the AWS console to open port 30000 in the security group (SG) of the worker nodes, so that the application can be reached from a browser:

  - We open the AWS console.

  - We go to the EC2 service.

  - We can see the servers that belong to the EKS cluster. We select the first one and open its security section, where we can see all the open ports. We edit the security group to allow TCP traffic on port 30000 for nodegroup-1 and save the change.

  - We repeat the same step for nodegroup-2.

- We open a browser and enter a node's public IP address followed by the port (IP:30000), which gives us the following result:

Proof of concept

Operating Kubernetes with kubectl

Kubernetes provides a command line tool, kubectl, for communicating with a Kubernetes cluster's control plane using the Kubernetes API.

Syntax: Use the following syntax to run kubectl commands from your terminal window:

kubectl [command] [TYPE] [NAME] [flags]

Where command, TYPE, NAME, and flags are:

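command: Specifies the operation you want to perform on one or more resources, for example create, get, describe, or delete.

TYPE: Specifies the resource type. Resource types are case-insensitive, and you can use the singular, plural, or abbreviated form. For example, the following commands produce the same output: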

kubectl get pod pod1

kubectl get pods pod1

kubectl get po pod1

NAME: Specifies the name of the resource.

flags: Specifies optional flags. For example, you can use the -s or --server flag to specify the address and port of the Kubernetes API server.
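In day-to-day use you rarely pass --server on every command: kubectl reads the API server address and credentials from a kubeconfig file (by default ~/.kube/config), and eksctl writes this file for you when it creates a cluster. The sketch below shows the general shape of such a file for the EKS cluster from the previous section. The endpoint and certificate values are placeholders, the user and context names are hypothetical, and exec-based authentication through aws-iam-authenticator is only one possible setup (newer setups often call aws eks get-token instead).

```yaml
# Sketch of a kubeconfig file; placeholder values must be replaced.
apiVersion: v1
kind: Config
clusters:
  - name: allan-cluster
    cluster:
      server: https://API_SERVER_ENDPOINT          # placeholder: the cluster's API server URL
      certificate-authority-data: BASE64_CA_CERT   # placeholder: base64-encoded CA certificate
users:
  - name: allan-user                               # hypothetical user entry name
    user:
      exec:                                        # kubectl runs this command to get a token
        apiVersion: client.authentication.k8s.io/v1beta1
        command: aws-iam-authenticator
        args:
          - token
          - -i
          - allan-cluster                          # cluster name the token is issued for
contexts:
  - name: allan-context                            # pairs a cluster with a user
    context:
      cluster: allan-cluster
      user: allan-user
      namespace: default
current-context: allan-context                     # context kubectl uses by default
```

With this file in place, kubectl get pods talks to the cluster named in current-context; kubectl --kubeconfig path/to/file get pods selects a different file, and -s/--server overrides only the server address.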

Features

- Service discovery and load balancing: Containers can find each other through the IP addresses or DNS names that Kubernetes assigns, and Kubernetes can balance network traffic between them.

- Storage orchestration: K8s can automatically mount the storage system you need.

- Automated rollouts and rollbacks: You can define deployment policies for application updates so that the service is not interrupted.

- Batch Execution: K8s can handle batch and CI workloads, replacing failed containers.

- Scheduling: Automatically places containers on the best nodes based on your requirements and other constraints, without sacrificing availability.

- Self-healing: Restarts containers that fail, replaces and reschedules containers when nodes die, removes containers that don’t respond to user-defined health-checks, and doesn’t present them to users until ready.

- Configuration and secrets management: Sensitive data such as tokens, API keys, ssh keys, passwords, etc. can be stored in Kubernetes.

- Scaling and auto-scaling: Allows you to scale applications manually or define rules to horizontally scale applications based on CPU usage.
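As an illustration of the auto-scaling feature, the following sketch defines a HorizontalPodAutoscaler for the hello-world-deployment created in the EKS section, aiming for an average CPU utilization of 70% with between 1 and 5 replicas. The HPA name and the numbers are assumptions. CPU utilization is measured relative to each container's CPU request, so the Deployment's container would also need a resources.requests.cpu value, which the earlier manifest does not set. The cluster also needs the Kubernetes Metrics Server, which EKS does not install by default, and the autoscaling/v2 API requires Kubernetes 1.23 or later (older clusters use autoscaling/v2beta2).

```yaml
# Hypothetical HPA for the hello-world Deployment from the EKS section.
apiVersion: autoscaling/v2
kind: HorizontalPodAutoscaler
metadata:
  name: hello-world-hpa
spec:
  scaleTargetRef:                 # the workload whose replica count is managed
    apiVersion: apps/v1
    kind: Deployment
    name: hello-world-deployment
  minReplicas: 1
  maxReplicas: 5
  metrics:
    - type: Resource
      resource:
        name: cpu
        target:
          type: Utilization       # percentage of the containers' CPU request
          averageUtilization: 70
```

After applying it, kubectl get hpa shows the current and target utilization for the autoscaler.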

Kubernetes objects

Kubernetes Objects are persistent entities within the Kubernetes system. Kubernetes uses these entities to represent the state of your cluster. Specifically, they can describe:

- Which applications run in containers (and on which nodes).

- The resources available for these applications.

- Policies about how those applications behave, such as restart, update, and fault-tolerance policies.

A Kubernetes object is a “record of intent”: once you create the object, the Kubernetes system constantly works to ensure that the object exists. By creating an object, you are telling the Kubernetes system what you want your cluster’s workload to look like.
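A minimal sketch of what such a record of intent looks like: the manifest below (hypothetical name web, illustrative nginx image) states the desired state in its spec field, here three replicas of a pod. You write only apiVersion, kind, metadata, and spec; Kubernetes adds a status section and keeps working to make the observed state match the spec.

```yaml
# Desired state for a hypothetical "web" application.
apiVersion: apps/v1        # API group and version of the object
kind: Deployment           # which kind of object this is
metadata:
  name: web                # unique per namespace and kind
  labels:
    app: web
spec:                      # the desired state ("record of intent")
  replicas: 3              # keep three copies of the pod running
  selector:
    matchLabels:
      app: web
  template:                # the pod each replica is created from
    metadata:
      labels:
        app: web
    spec:
      containers:
        - name: web
          image: nginx:1.23
          ports:
            - containerPort: 80
# No "status" section is written by hand: Kubernetes fills it in
# (for example, readyReplicas) as it works toward the spec.
```

After kubectl apply -f, running kubectl get deployment web -o yaml shows the status section that Kubernetes maintains next to the spec you wrote. If one of the three pods is deleted, the controller creates a replacement to restore the declared count.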

How to run applications on k8s?

There are different ways to deploy applications in Kubernetes. Among the most common tasks are:

- Run a stateless application using a Deployment.

- Run a single-instance stateful application.

- Run a replicated stateful application.

- Scale a StatefulSet.

- Delete a StatefulSet.

- Force delete StatefulSet pods.

- Horizontal pod autoscaling.

- HorizontalPodAutoscaler walkthrough.

- Specify a disruption budget for your application.

- Access the Kubernetes API from a pod.

To deploy clusters and applications within Kubernetes, we must know the following terms and concepts:

Pod

- Pods are the smallest deployable units of computing that you can create and manage in Kubernetes.

- A pod is a group of one or more containers, with shared storage and network resources, and a specification on how to run the containers.

- Pods and controllers: You can use workload resources to create and manage multiple pods. Here are some examples of workload resources that manage one or more pods:

  - Deployment

  - StatefulSet

  - DaemonSet

Deployment

- A deployment provides declarative updates for ReplicaSets.

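- You describe a desired state in a Deployment, and the Deployment controller changes the actual state to the desired state at a controlled rate.

- You can define Deployments to create new ReplicaSets, or to remove existing Deployments and adopt all their resources with new Deployments.

Service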

- An abstract way of exposing an application running in a set of pods as a network service.

- Kubernetes gives pods their IP addresses and a single DNS name for a set of pods, and can load balance between pods.

- Motivation: Pods are non-permanent resources. If you use a Deployment to run your app, it can create and destroy Pods dynamically, so the set of Pods running your app can change from one moment to the next.

- Service resources: A Service is an abstraction that defines a logical set of Pods and a policy for accessing them. The set of Pods targeted by a Service is usually determined by a selector.

- Cloud-Native Service Discovery: Kubernetes API can be used for service discovery in your application. For non-native applications, Kubernetes offers ways to put a network port or load balancer between your application and the back-end pods.

- Definition of a service: A Service in Kubernetes is a REST object, similar to a Pod.

- Services without selectors: A Service can also abstract other kinds of backends, including ones that run outside the cluster.

ReplicaSet

- Maintains a stable set of replica pods running at any given time.

- A ReplicaSet is defined with fields, including a selector that specifies how to identify the Pods it can acquire.

- A replicas field indicates how many Pods it should maintain.

- A ReplicaSet fulfills its purpose by creating and deleting Pods as needed to reach the desired number.

Ingress

- An API object that handles external access to services in a cluster, typically HTTP.

- Ingress can provide load balancing, SSL termination, and name-based virtual hosting.

- The ingress exposes HTTP and HTTPS routes from outside the cluster to services inside the cluster. Traffic routing is controlled by rules defined on the Ingress resource.
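A minimal sketch of an Ingress that routes HTTP traffic for one host name to the hello-world Service from the EKS section, with optional TLS termination. The host name, the TLS Secret name, and the nginx ingress class are assumptions, and an Ingress has no effect unless an ingress controller is running in the cluster.

```yaml
# Hypothetical Ingress for the hello-world Service.
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: hello-world-ingress
spec:
  ingressClassName: nginx            # assumption: controller registered under this class
  tls:                               # optional SSL/TLS termination at the ingress
    - hosts:
        - hello.example.com
      secretName: hello-world-tls    # hypothetical Secret with the certificate and key
  rules:
    - host: hello.example.com        # name-based virtual hosting
      http:
        paths:
          - path: /
            pathType: Prefix         # match every path under /
            backend:
              service:
                name: hello-world    # Service defined in deploy.yaml
                port:
                  number: 80         # the Service's port
```

Additional rules with different host values can send other domain names to other Services through the same Ingress.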

Competitors

OpenShift

OpenShift is built on Kubernetes: “Red Hat OpenShift Container Platform is an enterprise Kubernetes container platform, with end-to-end automated operations to manage multi cloud and hybrid cloud deployments.”

Docker Swarm

It is a tool built into Docker that allows you to group several Docker hosts into a cluster and manage them centrally, as well as orchestrate containers.

Docker Compose

It is a tool for defining and running multi-container Docker applications from YAML files. It can also be used on Windows, unlike some other container management systems.
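For comparison with the Kubernetes manifests earlier in this article, here is a minimal sketch of a Docker Compose file: two hypothetical services, a web server and a cache, described in one YAML file. The image tags and the host port 8080 are illustrative choices.

```yaml
# docker-compose.yml: hypothetical two-service application.
services:
  web:
    image: nginx:1.23
    ports:
      - "8080:80"      # host port 8080 -> container port 80
    depends_on:
      - cache          # start the cache container before web
  cache:
    image: redis:7
```

Running docker compose up -d in the same directory (or docker-compose up -d with the older standalone binary) starts both containers on a single Docker host; unlike Kubernetes, Compose itself does not schedule containers across a cluster of machines.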

Cloud-based: GKE, ECS, EKS, AKS

The major cloud providers (AWS, Google Cloud Platform, and Azure) each offer their own container orchestration solutions:

- AWS: ECS, EKS, Fargate

- Google Cloud: GKE

- Azure: AKS